我想做的是少样本图像目标检测,我的异物数量只有200张,我想做改进yolov11的算法,请给我一些即...

Tạo vào: 23 tháng 5, 2025

Tạo vào: 23 tháng 5, 2025

我想做的是少样本图像目标检测,我的异物数量只有200张,我想做改进yolov11的算法,请给我一些即插即用的模块或则改进yolov11的建议,我使用的是ultralytic库,我上传的图片是我直接用yolov11跑的算法结果,请进行分析,这个效果目前是怎样的,我现在的yaml文件如下:# Ultralytics AGPL-3.0 License - https://ultralytics.com/license

nc: 4 # number of classes

scales: # model compound scaling constants, i.e. 'model=yolo11n.yaml' will call yolo11.yaml with scale 'n'

n: [0.50, 0.25, 1024] # summary: 181 layers, 2624080 parameters, 2624064 gradients, 6.6 GFLOPs

s: [0.50, 0.50, 1024] # summary: 181 layers, 9458752 parameters, 9458736 gradients, 21.7 GFLOPs

m: [0.50, 1.00, 512] # summary: 231 layers, 20114688 parameters, 20114672 gradients, 68.5 GFLOPs

l: [1.00, 1.00, 512] # summary: 357 layers, 25372160 parameters, 25372144 gradients, 87.6 GFLOPs

x: [1.00, 1.50, 512] # summary: 357 layers, 56966176 parameters, 56966160 gradients, 196.0 GFLOPs

backbone:

head:

[-1, 1, nn.Upsample, [None, 2, "nearest"]]

[[-1, 6], 1, Concat, [1]] # cat backbone P4

[-1, 2, C3k2, [512, False]] # 13

[-1, 1, nn.Upsample, [None, 2, "nearest"]]

[[-1, 4], 1, Concat, [1]] # cat backbone P3

[-1, 2, C3k2, [256, False]] # 16 (P3/8-small)

[-1, 1, Conv, [256, 3, 2]]

[[-1, 14], 1, Concat, [1]] # cat head P4

[-1, 2, C3k2, [512, False]] # 19 (P4/16-medium)

[-1, 1, Conv, [512, 3, 2]]

[[-1, 11], 1, Concat, [1]] # cat head P5

[-1, 2, C3k2, [1024, True]] # 22 (P5/32-large)

[[17, 20, 23], 1, Detect, [nc]] # Detect(P3, P4, P5)

我现在的conv.py代码如下# Ultralytics AGPL-3.0 License - https://ultralytics.com/license

"""Convolution modules."""

import math

import numpy as np

import torch

import torch.nn as nn

all = (

"Conv",

"Conv2",

"LightConv",

"DWConv",

"DWConvTranspose2d",

"ConvTranspose",

"Focus",

"GhostConv",

"ChannelAttention",

"SpatialAttention",

"CBAM",

"Concat",

"RepConv",

"Index",

)

def autopad(k, p=None, d=1): # kernel, padding, dilation

"""Pad to 'same' shape outputs."""

if d > 1:

k = d * (k - 1) + 1 if isinstance(k, int) else [d * (x - 1) + 1 for x in k] # actual kernel-size

if p is None:

p = k // 2 if isinstance(k, int) else [x // 2 for x in k] # auto-pad

return p

class Conv(nn.Module):

"""

Standard convolution module with batch normalization and activation.

textAttributes: conv (nn.Conv2d): Convolutional layer. bn (nn.BatchNorm2d): Batch normalization layer. act (nn.Module): Activation function layer. default_act (nn.Module): Default activation function (SiLU). """ default_act = nn.SiLU() # default activation def __init__(self, c1, c2, k=1, s=1, p=None, g=1, d=1, act=True): """ Initialize Conv layer with given parameters. Args: c1 (int): Number of input channels. c2 (int): Number of output channels. k (int): Kernel size. s (int): Stride. p (int, optional): Padding. g (int): Groups. d (int): Dilation. act (bool | nn.Module): Activation function. """ super().__init__() self.conv = nn.Conv2d(c1, c2, k, s, autopad(k, p, d), groups=g, dilation=d, bias=False) self.bn = nn.BatchNorm2d(c2) self.act = self.default_act if act is True else act if isinstance(act, nn.Module) else nn.Identity() def forward(self, x): """ Apply convolution, batch normalization and activation to input tensor. Args: x (torch.Tensor): Input tensor. Returns: (torch.Tensor): Output tensor. """ return self.act(self.bn(self.conv(x))) def forward_fuse(self, x): """ Apply convolution and activation without batch normalization. Args: x (torch.Tensor): Input tensor. Returns: (torch.Tensor): Output tensor. """ return self.act(self.conv(x))

class Conv2(Conv):

"""

Simplified RepConv module with Conv fusing.

textAttributes: conv (nn.Conv2d): Main 3x3 convolutional layer. cv2 (nn.Conv2d): Additional 1x1 convolutional layer. bn (nn.BatchNorm2d): Batch normalization layer. act (nn.Module): Activation function layer. """ def __init__(self, c1, c2, k=3, s=1, p=None, g=1, d=1, act=True): """ Initialize Conv2 layer with given parameters. Args: c1 (int): Number of input channels. c2 (int): Number of output channels. k (int): Kernel size. s (int): Stride. p (int, optional): Padding. g (int): Groups. d (int): Dilation. act (bool | nn.Module): Activation function. """ super().__init__(c1, c2, k, s, p, g=g, d=d, act=act) self.cv2 = nn.Conv2d(c1, c2, 1, s, autopad(1, p, d), groups=g, dilation=d, bias=False) # add 1x1 conv def forward(self, x): """ Apply convolution, batch normalization and activation to input tensor. Args: x (torch.Tensor): Input tensor. Returns: (torch.Tensor): Output tensor. """ return self.act(self.bn(self.conv(x) + self.cv2(x))) def forward_fuse(self, x): """ Apply fused convolution, batch normalization and activation to input tensor. Args: x (torch.Tensor): Input tensor. Returns: (torch.Tensor): Output tensor. """ return self.act(self.bn(self.conv(x))) def fuse_convs(self): """Fuse parallel convolutions.""" w = torch.zeros_like(self.conv.weight.data) i = [x // 2 for x in w.shape[2:]] w[:, :, i[0] : i[0] + 1, i[1] : i[1] + 1] = self.cv2.weight.data.clone() self.conv.weight.data += w self.__delattr__("cv2") self.forward = self.forward_fuse

class LightConv(nn.Module):

"""

Light convolution module with 1x1 and depthwise convolutions.

textThis implementation is based on the PaddleDetection HGNetV2 backbone. Attributes: conv1 (Conv): 1x1 convolution layer. conv2 (DWConv): Depthwise convolution layer. """ def __init__(self, c1, c2, k=1, act=nn.ReLU()): """ Initialize LightConv layer with given parameters. Args: c1 (int): Number of input channels. c2 (int): Number of output channels. k (int): Kernel size for depthwise convolution. act (nn.Module): Activation function. """ super().__init__() self.conv1 = Conv(c1, c2, 1, act=False) self.conv2 = DWConv(c2, c2, k, act=act) def forward(self, x): """ Apply 2 convolutions to input tensor. Args: x (torch.Tensor): Input tensor. Returns: (torch.Tensor): Output tensor. """ return self.conv2(self.conv1(x))

class DWConv(Conv):

"""Depth-wise convolution module."""

textdef __init__(self, c1, c2, k=1, s=1, d=1, act=True): """ Initialize depth-wise convolution with given parameters. Args: c1 (int): Number of input channels. c2 (int): Number of output channels. k (int): Kernel size. s (int): Stride. d (int): Dilation. act (bool | nn.Module): Activation function. """ super().__init__(c1, c2, k, s, g=math.gcd(c1, c2), d=d, act=act)

class DWConvTranspose2d(nn.ConvTranspose2d):

"""Depth-wise transpose convolution module."""

textdef __init__(self, c1, c2, k=1, s=1, p1=0, p2=0): """ Initialize depth-wise transpose convolution with given parameters. Args: c1 (int): Number of input channels. c2 (int): Number of output channels. k (int): Kernel size. s (int): Stride. p1 (int): Padding. p2 (int): Output padding. """ super().__init__(c1, c2, k, s, p1, p2, groups=math.gcd(c1, c2))

class ConvTranspose(nn.Module):

"""

Convolution transpose module with optional batch normalization and activation.

textAttributes: conv_transpose (nn.ConvTranspose2d): Transposed convolution layer. bn (nn.BatchNorm2d | nn.Identity): Batch normalization layer. act (nn.Module): Activation function layer. default_act (nn.Module): Default activation function (SiLU). """ default_act = nn.SiLU() # default activation def __init__(self, c1, c2, k=2, s=2, p=0, bn=True, act=True): """ Initialize ConvTranspose layer with given parameters. Args: c1 (int): Number of input channels. c2 (int): Number of output channels. k (int): Kernel size. s (int): Stride. p (int): Padding. bn (bool): Use batch normalization. act (bool | nn.Module): Activation function. """ super().__init__() self.conv_transpose = nn.ConvTranspose2d(c1, c2, k, s, p, bias=not bn) self.bn = nn.BatchNorm2d(c2) if bn else nn.Identity() self.act = self.default_act if act is True else act if isinstance(act, nn.Module) else nn.Identity() def forward(self, x): """ Apply transposed convolution, batch normalization and activation to input. Args: x (torch.Tensor): Input tensor. Returns: (torch.Tensor): Output tensor. """ return self.act(self.bn(self.conv_transpose(x))) def forward_fuse(self, x): """ Apply activation and convolution transpose operation to input. Args: x (torch.Tensor): Input tensor. Returns: (torch.Tensor): Output tensor. """ return self.act(self.conv_transpose(x))

class Focus(nn.Module):

"""

Focus module for concentrating feature information.

textSlices input tensor into 4 parts and concatenates them in the channel dimension. Attributes: conv (Conv): Convolution layer. """ def __init__(self, c1, c2, k=1, s=1, p=None, g=1, act=True): """ Initialize Focus module with given parameters. Args: c1 (int): Number of input channels. c2 (int): Number of output channels. k (int): Kernel size. s (int): Stride. p (int, optional): Padding. g (int): Groups. act (bool | nn.Module): Activation function. """ super().__init__() self.conv = Conv(c1 * 4, c2, k, s, p, g, act=act) # self.contract = Contract(gain=2) def forward(self, x): """ Apply Focus operation and convolution to input tensor. Input shape is (b,c,w,h) and output shape is (b,4c,w/2,h/2). Args: x (torch.Tensor): Input tensor. Returns: (torch.Tensor): Output tensor. """ return self.conv(torch.cat((x[..., ::2, ::2], x[..., 1::2, ::2], x[..., ::2, 1::2], x[..., 1::2, 1::2]), 1)) # return self.conv(self.contract(x))

class GhostConv(nn.Module):

"""

Ghost Convolution module.

textGenerates more features with fewer parameters by using cheap operations. Attributes: cv1 (Conv): Primary convolution. cv2 (Conv): Cheap operation convolution. References: https://github.com/huawei-noah/Efficient-AI-Backbones """ def __init__(self, c1, c2, k=1, s=1, g=1, act=True): """ Initialize Ghost Convolution module with given parameters. Args: c1 (int): Number of input channels. c2 (int): Number of output channels. k (int): Kernel size. s (int): Stride. g (int): Groups. act (bool | nn.Module): Activation function. """ super().__init__() c_ = c2 // 2 # hidden channels self.cv1 = Conv(c1, c_, k, s, None, g, act=act) self.cv2 = Conv(c_, c_, 5, 1, None, c_, act=act) def forward(self, x): """ Apply Ghost Convolution to input tensor. Args: x (torch.Tensor): Input tensor. Returns: (torch.Tensor): Output tensor with concatenated features. """ y = self.cv1(x) return torch.cat((y, self.cv2(y)), 1)

class RepConv(nn.Module):

"""

RepConv module with training and deploy modes.

textThis module is used in RT-DETR and can fuse convolutions during inference for efficiency. Attributes: conv1 (Conv): 3x3 convolution. conv2 (Conv): 1x1 convolution. bn (nn.BatchNorm2d, optional): Batch normalization for identity branch. act (nn.Module): Activation function. default_act (nn.Module): Default activation function (SiLU). References: https://github.com/DingXiaoH/RepVGG/blob/main/repvgg.py """ default_act = nn.SiLU() # default activation def __init__(self, c1, c2, k=3, s=1, p=1, g=1, d=1, act=True, bn=False, deploy=False): """ Initialize RepConv module with given parameters. Args: c1 (int): Number of input channels. c2 (int): Number of output channels. k (int): Kernel size. s (int): Stride. p (int): Padding. g (int): Groups. d (int): Dilation. act (bool | nn.Module): Activation function. bn (bool): Use batch normalization for identity branch. deploy (bool): Deploy mode for inference. """ super().__init__() assert k == 3 and p == 1 self.g = g self.c1 = c1 self.c2 = c2 self.act = self.default_act if act is True else act if isinstance(act, nn.Module) else nn.Identity() self.bn = nn.BatchNorm2d(num_features=c1) if bn and c2 == c1 and s == 1 else None self.conv1 = Conv(c1, c2, k, s, p=p, g=g, act=False) self.conv2 = Conv(c1, c2, 1, s, p=(p - k // 2), g=g, act=False) def forward_fuse(self, x): """ Forward pass for deploy mode. Args: x (torch.Tensor): Input tensor. Returns: (torch.Tensor): Output tensor. """ return self.act(self.conv(x)) def forward(self, x): """ Forward pass for training mode. Args: x (torch.Tensor): Input tensor. Returns: (torch.Tensor): Output tensor. """ id_out = 0 if self.bn is None else self.bn(x) return self.act(self.conv1(x) + self.conv2(x) + id_out) def get_equivalent_kernel_bias(self): """ Calculate equivalent kernel and bias by fusing convolutions. Returns: (torch.Tensor): Equivalent kernel (torch.Tensor): Equivalent bias """ kernel3x3, bias3x3 = self._fuse_bn_tensor(self.conv1) kernel1x1, bias1x1 = self._fuse_bn_tensor(self.conv2) kernelid, biasid = self._fuse_bn_tensor(self.bn) return kernel3x3 + self._pad_1x1_to_3x3_tensor(kernel1x1) + kernelid, bias3x3 + bias1x1 + biasid @staticmethod def _pad_1x1_to_3x3_tensor(kernel1x1): """ Pad a 1x1 kernel to 3x3 size. Args: kernel1x1 (torch.Tensor): 1x1 convolution kernel. Returns: (torch.Tensor): Padded 3x3 kernel. """ if kernel1x1 is None: return 0 else: return torch.nn.functional.pad(kernel1x1, [1, 1, 1, 1]) def _fuse_bn_tensor(self, branch): """ Fuse batch normalization with convolution weights. Args: branch (Conv | nn.BatchNorm2d | None): Branch to fuse. Returns: (torch.Tensor): Fused kernel (torch.Tensor): Fused bias """ if branch is None: return 0, 0 if isinstance(branch, Conv): kernel = branch.conv.weight running_mean = branch.bn.running_mean running_var = branch.bn.running_var gamma = branch.bn.weight beta = branch.bn.bias eps = branch.bn.eps elif isinstance(branch, nn.BatchNorm2d): if not hasattr(self, "id_tensor"): input_dim = self.c1 // self.g kernel_value = np.zeros((self.c1, input_dim, 3, 3), dtype=np.float32) for i in range(self.c1): kernel_value[i, i % input_dim, 1, 1] = 1 self.id_tensor = torch.from_numpy(kernel_value).to(branch.weight.device) kernel = self.id_tensor running_mean = branch.running_mean running_var = branch.running_var gamma = branch.weight beta = branch.bias eps = branch.eps std = (running_var + eps).sqrt() t = (gamma / std).reshape(-1, 1, 1, 1) return kernel * t, beta - running_mean * gamma / std def fuse_convs(self): """Fuse convolutions for inference by creating a single equivalent convolution.""" if hasattr(self, "conv"): return kernel, bias = self.get_equivalent_kernel_bias() self.conv = nn.Conv2d( in_channels=self.conv1.conv.in_channels, out_channels=self.conv1.conv.out_channels, kernel_size=self.conv1.conv.kernel_size, stride=self.conv1.conv.stride, padding=self.conv1.conv.padding, dilation=self.conv1.conv.dilation, groups=self.conv1.conv.groups, bias=True, ).requires_grad_(False) self.conv.weight.data = kernel self.conv.bias.data = bias for para in self.parameters(): para.detach_() self.__delattr__("conv1") self.__delattr__("conv2") if hasattr(self, "nm"): self.__delattr__("nm") if hasattr(self, "bn"): self.__delattr__("bn") if hasattr(self, "id_tensor"): self.__delattr__("id_tensor")

class ChannelAttention(nn.Module):

"""

Channel-attention module for feature recalibration.

textApplies attention weights to channels based on global average pooling. Attributes: pool (nn.AdaptiveAvgPool2d): Global average pooling. fc (nn.Conv2d): Fully connected layer implemented as 1x1 convolution. act (nn.Sigmoid): Sigmoid activation for attention weights. References: https://github.com/open-mmlab/mmdetection/tree/v3.0.0rc1/configs/rtmdet """ def __init__(self, channels: int) -> None: """ Initialize Channel-attention module. Args: channels (int): Number of input channels. """ super().__init__() self.pool = nn.AdaptiveAvgPool2d(1) self.fc = nn.Conv2d(channels, channels, 1, 1, 0, bias=True) self.act = nn.Sigmoid() def forward(self, x: torch.Tensor) -> torch.Tensor: """ Apply channel attention to input tensor. Args: x (torch.Tensor): Input tensor. Returns: (torch.Tensor): Channel-attended output tensor. """ return x * self.act(self.fc(self.pool(x)))

class SpatialAttention(nn.Module):

"""

Spatial-attention module for feature recalibration.

textApplies attention weights to spatial dimensions based on channel statistics. Attributes: cv1 (nn.Conv2d): Convolution layer for spatial attention. act (nn.Sigmoid): Sigmoid activation for attention weights. """ def __init__(self, kernel_size=7): """ Initialize Spatial-attention module. Args: kernel_size (int): Size of the convolutional kernel (3 or 7). """ super().__init__() assert kernel_size in {3, 7}, "kernel size must be 3 or 7" padding = 3 if kernel_size == 7 else 1 self.cv1 = nn.Conv2d(2, 1, kernel_size, padding=padding, bias=False) self.act = nn.Sigmoid() def forward(self, x): """ Apply spatial attention to input tensor. Args: x (torch.Tensor): Input tensor. Returns: (torch.Tensor): Spatial-attended output tensor. """ return x * self.act(self.cv1(torch.cat([torch.mean(x, 1, keepdim=True), torch.max(x, 1, keepdim=True)[0]], 1)))

class CBAM(nn.Module):

"""

Convolutional Block Attention Module.

textCombines channel and spatial attention mechanisms for comprehensive feature refinement. Attributes: channel_attention (ChannelAttention): Channel attention module. spatial_attention (SpatialAttention): Spatial attention module. """ def __init__(self, c1, kernel_size=7): """ Initialize CBAM with given parameters. Args: c1 (int): Number of input channels. kernel_size (int): Size of the convolutional kernel for spatial attention. """ super().__init__() self.channel_attention = ChannelAttention(c1) self.spatial_attention = SpatialAttention(kernel_size) def forward(self, x): """ Apply channel and spatial attention sequentially to input tensor. Args: x (torch.Tensor): Input tensor. Returns: (torch.Tensor): Attended output tensor. """ return self.spatial_attention(self.channel_attention(x))

class Concat(nn.Module):

"""

Concatenate a list of tensors along specified dimension.

textAttributes: d (int): Dimension along which to concatenate tensors. """ def __init__(self, dimension=1): """ Initialize Concat module. Args: dimension (int): Dimension along which to concatenate tensors. """ super().__init__() self.d = dimension def forward(self, x): """ Concatenate input tensors along specified dimension. Args: x (List[torch.Tensor]): List of input tensors. Returns: (torch.Tensor): Concatenated tensor. """ return torch.cat(x, self.d)

class Index(nn.Module):

"""

Returns a particular index of the input.

textAttributes: index (int): Index to select from input. """ def __init__(self, index=0): """ Initialize Index module. Args: index (int): Index to select from input. """ super().__init__() self.index = index def forward(self, x): """ Select and return a particular index from input. Args: x (List[torch.Tensor]): List of input tensors. Returns: (torch.Tensor): Selected tensor. """ return x[self.index]

我现在的task.py文件如下:# Ultralytics AGPL-3.0 License - https://ultralytics.com/license

import contextlib

import pickle

import re

import types

from copy import deepcopy

from pathlib import Path

import torch

import torch.nn as nn

from ultralytics.nn.autobackend import check_class_names

from ultralytics.nn.modules import (

AIFI,

C1,

C2,

C2PSA,

C3,

C3TR,

ELAN1,

OBB,

PSA,

SPP,

SPPELAN,

SPPF,

A2C2f,

AConv,

ADown,

Bottleneck,

BottleneckCSP,

C2f,

C2fAttn,

C2fCIB,

C2fPSA,

C3Ghost,

C3k2,

C3x,

CBFuse,

CBLinear,

Classify,

Concat,

Conv,

Conv2,

ConvTranspose,

Detect,

DWConv,

DWConvTranspose2d,

Focus,

CBAM,

GhostBottleneck,

GhostConv,

HGBlock,

HGStem,

ImagePoolingAttn,

Index,

LRPCHead,

Pose,

RepC3,

RepConv,

RepNCSPELAN4,

RepVGGDW,

ResNetLayer,

RTDETRDecoder,

SCDown,

Segment,

TorchVision,

WorldDetect,

YOLOEDetect,

YOLOESegment,

v10Detect,

)

from ultralytics.utils import DEFAULT_CFG_DICT, DEFAULT_CFG_KEYS, LOGGER, YAML, colorstr, emojis

from ultralytics.utils.checks import check_requirements, check_suffix, check_yaml

from ultralytics.utils.loss import (

E2EDetectLoss,

v8ClassificationLoss,

v8DetectionLoss,

v8OBBLoss,

v8PoseLoss,

v8SegmentationLoss,

)

from ultralytics.utils.ops import make_divisible

from ultralytics.utils.plotting import feature_visualization

from ultralytics.utils.torch_utils import (

fuse_conv_and_bn,

fuse_deconv_and_bn,

initialize_weights,

intersect_dicts,

model_info,

scale_img,

smart_inference_mode,

time_sync,

)

class BaseModel(torch.nn.Module):

"""The BaseModel class serves as a base class for all the models in the Ultralytics YOLO family."""

textdef forward(self, x, *args, **kwargs): """ Perform forward pass of the model for either training or inference. If x is a dict, calculates and returns the loss for training. Otherwise, returns predictions for inference. Args: x (torch.Tensor | dict): Input tensor for inference, or dict with image tensor and labels for training. *args (Any): Variable length argument list. **kwargs (Any): Arbitrary keyword arguments. Returns: (torch.Tensor): Loss if x is a dict (training), or network predictions (inference). """ if isinstance(x, dict): # for cases of training and validating while training. return self.loss(x, *args, **kwargs) return self.predict(x, *args, **kwargs) def predict(self, x, profile=False, visualize=False, augment=False, embed=None): """ Perform a forward pass through the network. Args: x (torch.Tensor): The input tensor to the model. profile (bool): Print the computation time of each layer if True. visualize (bool): Save the feature maps of the model if True. augment (bool): Augment image during prediction. embed (list, optional): A list of feature vectors/embeddings to return. Returns: (torch.Tensor): The last output of the model. """ if augment: return self._predict_augment(x) return self._predict_once(x, profile, visualize, embed) def _predict_once(self, x, profile=False, visualize=False, embed=None): """ Perform a forward pass through the network. Args: x (torch.Tensor): The input tensor to the model. profile (bool): Print the computation time of each layer if True. visualize (bool): Save the feature maps of the model if True. embed (list, optional): A list of feature vectors/embeddings to return. Returns: (torch.Tensor): The last output of the model. """ y, dt, embeddings = [], [], [] # outputs for m in self.model: if m.f != -1: # if not from previous layer x = y[m.f] if isinstance(m.f, int) else [x if j == -1 else y[j] for j in m.f] # from earlier layers if profile: self._profile_one_layer(m, x, dt) x = m(x) # run y.append(x if m.i in self.save else None) # save output if visualize: feature_visualization(x, m.type, m.i, save_dir=visualize) if embed and m.i in embed: embeddings.append(torch.nn.functional.adaptive_avg_pool2d(x, (1, 1)).squeeze(-1).squeeze(-1)) # flatten if m.i == max(embed): return torch.unbind(torch.cat(embeddings, 1), dim=0) return x def _predict_augment(self, x): """Perform augmentations on input image x and return augmented inference.""" LOGGER.warning( f"{self.__class__.__name__} does not support 'augment=True' prediction. " f"Reverting to single-scale prediction." ) return self._predict_once(x) def _profile_one_layer(self, m, x, dt): """ Profile the computation time and FLOPs of a single layer of the model on a given input. Args: m (torch.nn.Module): The layer to be profiled. x (torch.Tensor): The input data to the layer. dt (list): A list to store the computation time of the layer. """ try: import thop except ImportError: thop = None # conda support without 'ultralytics-thop' installed c = m == self.model[-1] and isinstance(x, list) # is final layer list, copy input as inplace fix flops = thop.profile(m, inputs=[x.copy() if c else x], verbose=False)[0] / 1e9 * 2 if thop else 0 # GFLOPs t = time_sync() for _ in range(10): m(x.copy() if c else x) dt.append((time_sync() - t) * 100) if m == self.model[0]: LOGGER.info(f"{'time (ms)':>10s} {'GFLOPs':>10s} {'params':>10s} module") LOGGER.info(f"{dt[-1]:10.2f} {flops:10.2f} {m.np:10.0f} {m.type}") if c: LOGGER.info(f"{sum(dt):10.2f} {'-':>10s} {'-':>10s} Total") def fuse(self, verbose=True): """ Fuse the `Conv2d()` and `BatchNorm2d()` layers of the model into a single layer for improved computation efficiency. Returns: (torch.nn.Module): The fused model is returned. """ if not self.is_fused(): for m in self.model.modules(): if isinstance(m, (Conv, Conv2, DWConv)) and hasattr(m, "bn"): if isinstance(m, Conv2): m.fuse_convs() m.conv = fuse_conv_and_bn(m.conv, m.bn) # update conv delattr(m, "bn") # remove batchnorm m.forward = m.forward_fuse # update forward if isinstance(m, ConvTranspose) and hasattr(m, "bn"): m.conv_transpose = fuse_deconv_and_bn(m.conv_transpose, m.bn) delattr(m, "bn") # remove batchnorm m.forward = m.forward_fuse # update forward if isinstance(m, RepConv): m.fuse_convs() m.forward = m.forward_fuse # update forward if isinstance(m, RepVGGDW): m.fuse() m.forward = m.forward_fuse if isinstance(m, v10Detect): m.fuse() # remove one2many head self.info(verbose=verbose) return self def is_fused(self, thresh=10): """ Check if the model has less than a certain threshold of BatchNorm layers. Args: thresh (int, optional): The threshold number of BatchNorm layers. Returns: (bool): True if the number of BatchNorm layers in the model is less than the threshold, False otherwise. """ bn = tuple(v for k, v in torch.nn.__dict__.items() if "Norm" in k) # normalization layers, i.e. BatchNorm2d() return sum(isinstance(v, bn) for v in self.modules()) < thresh # True if < 'thresh' BatchNorm layers in model def info(self, detailed=False, verbose=True, imgsz=640): """ Print model information. Args: detailed (bool): If True, prints out detailed information about the model. verbose (bool): If True, prints out the model information. imgsz (int): The size of the image that the model will be trained on. """ return model_info(self, detailed=detailed, verbose=verbose, imgsz=imgsz) def _apply(self, fn): """ Apply a function to all tensors in the model that are not parameters or registered buffers. Args: fn (function): The function to apply to the model. Returns: (BaseModel): An updated BaseModel object. """ self = super()._apply(fn) m = self.model[-1] # Detect() if isinstance( m, Detect ): # includes all Detect subclasses like Segment, Pose, OBB, WorldDetect, YOLOEDetect, YOLOESegment m.stride = fn(m.stride) m.anchors = fn(m.anchors) m.strides = fn(m.strides) return self def load(self, weights, verbose=True): """ Load weights into the model. Args: weights (dict | torch.nn.Module): The pre-trained weights to be loaded. verbose (bool, optional): Whether to log the transfer progress. """ model = weights["model"] if isinstance(weights, dict) else weights # torchvision models are not dicts csd = model.float().state_dict() # checkpoint state_dict as FP32 csd = intersect_dicts(csd, self.state_dict()) # intersect self.load_state_dict(csd, strict=False) # load if verbose: LOGGER.info(f"Transferred {len(csd)}/{len(self.model.state_dict())} items from pretrained weights") def loss(self, batch, preds=None): """ Compute loss. Args: batch (dict): Batch to compute loss on. preds (torch.Tensor | List[torch.Tensor], optional): Predictions. """ if getattr(self, "criterion", None) is None: self.criterion = self.init_criterion() preds = self.forward(batch["img"]) if preds is None else preds return self.criterion(preds, batch) def init_criterion(self): """Initialize the loss criterion for the BaseModel.""" raise NotImplementedError("compute_loss() needs to be implemented by task heads")

class DetectionModel(BaseModel):

"""YOLO detection model."""

textdef __init__(self, cfg="yolo11n.yaml", ch=3, nc=None, verbose=True): """ Initialize the YOLO detection model with the given config and parameters. Args: cfg (str | dict): Model configuration file path or dictionary. ch (int): Number of input channels. nc (int, optional): Number of classes. verbose (bool): Whether to display model information. """ super().__init__() self.yaml = cfg if isinstance(cfg, dict) else yaml_model_load(cfg) # cfg dict if self.yaml["backbone"][0][2] == "Silence": LOGGER.warning( "YOLOv9 `Silence` module is deprecated in favor of torch.nn.Identity. " "Please delete local *.pt file and re-download the latest model checkpoint." ) self.yaml["backbone"][0][2] = "nn.Identity" # Define model self.yaml["channels"] = ch # save channels if nc and nc != self.yaml["nc"]: LOGGER.info(f"Overriding model.yaml nc={self.yaml['nc']} with nc={nc}") self.yaml["nc"] = nc # override YAML value self.model, self.save = parse_model(deepcopy(self.yaml), ch=ch, verbose=verbose) # model, savelist self.names = {i: f"{i}" for i in range(self.yaml["nc"])} # default names dict self.inplace = self.yaml.get("inplace", True) self.end2end = getattr(self.model[-1], "end2end", False) # Build strides m = self.model[-1] # Detect() if isinstance(m, Detect): # includes all Detect subclasses like Segment, Pose, OBB, YOLOEDetect, YOLOESegment s = 256 # 2x min stride m.inplace = self.inplace def _forward(x): """Perform a forward pass through the model, handling different Detect subclass types accordingly.""" if self.end2end: return self.forward(x)["one2many"] return self.forward(x)[0] if isinstance(m, (Segment, YOLOESegment, Pose, OBB)) else self.forward(x) m.stride = torch.tensor([s / x.shape[-2] for x in _forward(torch.zeros(1, ch, s, s))]) # forward self.stride = m.stride m.bias_init() # only run once else: self.stride = torch.Tensor([32]) # default stride for i.e. RTDETR # Init weights, biases initialize_weights(self) if verbose: self.info() LOGGER.info("") def _predict_augment(self, x): """ Perform augmentations on input image x and return augmented inference and train outputs. Args: x (torch.Tensor): Input image tensor. Returns: (torch.Tensor): Augmented inference output. """ if getattr(self, "end2end", False) or self.__class__.__name__ != "DetectionModel": LOGGER.warning("Model does not support 'augment=True', reverting to single-scale prediction.") return self._predict_once(x) img_size = x.shape[-2:] # height, width s = [1, 0.83, 0.67] # scales f = [None, 3, None] # flips (2-ud, 3-lr) y = [] # outputs for si, fi in zip(s, f): xi = scale_img(x.flip(fi) if fi else x, si, gs=int(self.stride.max())) yi = super().predict(xi)[0] # forward yi = self._descale_pred(yi, fi, si, img_size) y.append(yi) y = self._clip_augmented(y) # clip augmented tails return torch.cat(y, -1), None # augmented inference, train @staticmethod def _descale_pred(p, flips, scale, img_size, dim=1): """ De-scale predictions following augmented inference (inverse operation). Args: p (torch.Tensor): Predictions tensor. flips (int): Flip type (0=none, 2=ud, 3=lr). scale (float): Scale factor. img_size (tuple): Original image size (height, width). dim (int): Dimension to split at. Returns: (torch.Tensor): De-scaled predictions. """ p[:, :4] /= scale # de-scale x, y, wh, cls = p.split((1, 1, 2, p.shape[dim] - 4), dim) if flips == 2: y = img_size[0] - y # de-flip ud elif flips == 3: x = img_size[1] - x # de-flip lr return torch.cat((x, y, wh, cls), dim) def _clip_augmented(self, y): """ Clip YOLO augmented inference tails. Args: y (List[torch.Tensor]): List of detection tensors. Returns: (List[torch.Tensor]): Clipped detection tensors. """ nl = self.model[-1].nl # number of detection layers (P3-P5) g = sum(4**x for x in range(nl)) # grid points e = 1 # exclude layer count i = (y[0].shape[-1] // g) * sum(4**x for x in range(e)) # indices y[0] = y[0][..., :-i] # large i = (y[-1].shape[-1] // g) * sum(4 ** (nl - 1 - x) for x in range(e)) # indices y[-1] = y[-1][..., i:] # small return y def init_criterion(self): """Initialize the loss criterion for the DetectionModel.""" return E2EDetectLoss(self) if getattr(self, "end2end", False) else v8DetectionLoss(self)

class OBBModel(DetectionModel):

"""YOLO Oriented Bounding Box (OBB) model."""

textdef __init__(self, cfg="yolo11n-obb.yaml", ch=3, nc=None, verbose=True): """ Initialize YOLO OBB model with given config and parameters. Args: cfg (str | dict): Model configuration file path or dictionary. ch (int): Number of input channels. nc (int, optional): Number of classes. verbose (bool): Whether to display model information. """ super().__init__(cfg=cfg, ch=ch, nc=nc, verbose=verbose) def init_criterion(self): """Initialize the loss criterion for the model.""" return v8OBBLoss(self)

class SegmentationModel(DetectionModel):

"""YOLO segmentation model."""

textdef __init__(self, cfg="yolo11n-seg.yaml", ch=3, nc=None, verbose=True): """ Initialize YOLOv8 segmentation model with given config and parameters. Args: cfg (str | dict): Model configuration file path or dictionary. ch (int): Number of input channels. nc (int, optional): Number of classes. verbose (bool): Whether to display model information. """ super().__init__(cfg=cfg, ch=ch, nc=nc, verbose=verbose) def init_criterion(self): """Initialize the loss criterion for the SegmentationModel.""" return v8SegmentationLoss(self)

class PoseModel(DetectionModel):

"""YOLO pose model."""

textdef __init__(self, cfg="yolo11n-pose.yaml", ch=3, nc=None, data_kpt_shape=(None, None), verbose=True): """ Initialize YOLOv8 Pose model. Args: cfg (str | dict): Model configuration file path or dictionary. ch (int): Number of input channels. nc (int, optional): Number of classes. data_kpt_shape (tuple): Shape of keypoints data. verbose (bool): Whether to display model information. """ if not isinstance(cfg, dict): cfg = yaml_model_load(cfg) # load model YAML if any(data_kpt_shape) and list(data_kpt_shape) != list(cfg["kpt_shape"]): LOGGER.info(f"Overriding model.yaml kpt_shape={cfg['kpt_shape']} with kpt_shape={data_kpt_shape}") cfg["kpt_shape"] = data_kpt_shape super().__init__(cfg=cfg, ch=ch, nc=nc, verbose=verbose) def init_criterion(self): """Initialize the loss criterion for the PoseModel.""" return v8PoseLoss(self)

class ClassificationModel(BaseModel):

"""YOLO classification model."""

textdef __init__(self, cfg="yolo11n-cls.yaml", ch=3, nc=None, verbose=True): """ Initialize ClassificationModel with YAML, channels, number of classes, verbose flag. Args: cfg (str | dict): Model configuration file path or dictionary. ch (int): Number of input channels. nc (int, optional): Number of classes. verbose (bool): Whether to display model information. """ super().__init__() self._from_yaml(cfg, ch, nc, verbose) def _from_yaml(self, cfg, ch, nc, verbose): """ Set YOLOv8 model configurations and define the model architecture. Args: cfg (str | dict): Model configuration file path or dictionary. ch (int): Number of input channels. nc (int, optional): Number of classes. verbose (bool): Whether to display model information. """ self.yaml = cfg if isinstance(cfg, dict) else yaml_model_load(cfg) # cfg dict # Define model ch = self.yaml["channels"] = self.yaml.get("channels", ch) # input channels if nc and nc != self.yaml["nc"]: LOGGER.info(f"Overriding model.yaml nc={self.yaml['nc']} with nc={nc}") self.yaml["nc"] = nc # override YAML value elif not nc and not self.yaml.get("nc", None): raise ValueError("nc not specified. Must specify nc in model.yaml or function arguments.") self.model, self.save = parse_model(deepcopy(self.yaml), ch=ch, verbose=verbose) # model, savelist self.stride = torch.Tensor([1]) # no stride constraints self.names = {i: f"{i}" for i in range(self.yaml["nc"])} # default names dict self.info() @staticmethod def reshape_outputs(model, nc): """ Update a TorchVision classification model to class count 'n' if required. Args: model (torch.nn.Module): Model to update. nc (int): New number of classes. """ name, m = list((model.model if hasattr(model, "model") else model).named_children())[-1] # last module if isinstance(m, Classify): # YOLO Classify() head if m.linear.out_features != nc: m.linear = torch.nn.Linear(m.linear.in_features, nc) elif isinstance(m, torch.nn.Linear): # ResNet, EfficientNet if m.out_features != nc: setattr(model, name, torch.nn.Linear(m.in_features, nc)) elif isinstance(m, torch.nn.Sequential): types = [type(x) for x in m] if torch.nn.Linear in types: i = len(types) - 1 - types[::-1].index(torch.nn.Linear) # last torch.nn.Linear index if m[i].out_features != nc: m[i] = torch.nn.Linear(m[i].in_features, nc) elif torch.nn.Conv2d in types: i = len(types) - 1 - types[::-1].index(torch.nn.Conv2d) # last torch.nn.Conv2d index if m[i].out_channels != nc: m[i] = torch.nn.Conv2d( m[i].in_channels, nc, m[i].kernel_size, m[i].stride, bias=m[i].bias is not None ) def init_criterion(self): """Initialize the loss criterion for the ClassificationModel.""" return v8ClassificationLoss()

class RTDETRDetectionModel(DetectionModel):

"""

RTDETR (Real-time DEtection and Tracking using Transformers) Detection Model class.

textThis class is responsible for constructing the RTDETR architecture, defining loss functions, and facilitating both the training and inference processes. RTDETR is an object detection and tracking model that extends from the DetectionModel base class. Methods: init_criterion: Initializes the criterion used for loss calculation. loss: Computes and returns the loss during training. predict: Performs a forward pass through the network and returns the output. """ def __init__(self, cfg="rtdetr-l.yaml", ch=3, nc=None, verbose=True): """ Initialize the RTDETRDetectionModel. Args: cfg (str | dict): Configuration file name or path. ch (int): Number of input channels. nc (int, optional): Number of classes. verbose (bool): Print additional information during initialization. """ super().__init__(cfg=cfg, ch=ch, nc=nc, verbose=verbose) def init_criterion(self): """Initialize the loss criterion for the RTDETRDetectionModel.""" from ultralytics.models.utils.loss import RTDETRDetectionLoss return RTDETRDetectionLoss(nc=self.nc, use_vfl=True) def loss(self, batch, preds=None): """ Compute the loss for the given batch of data. Args: batch (dict): Dictionary containing image and label data. preds (torch.Tensor, optional): Precomputed model predictions. Returns: (tuple): A tuple containing the total loss and main three losses in a tensor. """ if not hasattr(self, "criterion"): self.criterion = self.init_criterion() img = batch["img"] # NOTE: preprocess gt_bbox and gt_labels to list. bs = len(img) batch_idx = batch["batch_idx"] gt_groups = [(batch_idx == i).sum().item() for i in range(bs)] targets = { "cls": batch["cls"].to(img.device, dtype=torch.long).view(-1), "bboxes": batch["bboxes"].to(device=img.device), "batch_idx": batch_idx.to(img.device, dtype=torch.long).view(-1), "gt_groups": gt_groups, } preds = self.predict(img, batch=targets) if preds is None else preds dec_bboxes, dec_scores, enc_bboxes, enc_scores, dn_meta = preds if self.training else preds[1] if dn_meta is None: dn_bboxes, dn_scores = None, None else: dn_bboxes, dec_bboxes = torch.split(dec_bboxes, dn_meta["dn_num_split"], dim=2) dn_scores, dec_scores = torch.split(dec_scores, dn_meta["dn_num_split"], dim=2) dec_bboxes = torch.cat([enc_bboxes.unsqueeze(0), dec_bboxes]) # (7, bs, 300, 4) dec_scores = torch.cat([enc_scores.unsqueeze(0), dec_scores]) loss = self.criterion( (dec_bboxes, dec_scores), targets, dn_bboxes=dn_bboxes, dn_scores=dn_scores, dn_meta=dn_meta ) # NOTE: There are like 12 losses in RTDETR, backward with all losses but only show the main three losses. return sum(loss.values()), torch.as_tensor( [loss[k].detach() for k in ["loss_giou", "loss_class", "loss_bbox"]], device=img.device ) def predict(self, x, profile=False, visualize=False, batch=None, augment=False, embed=None): """ Perform a forward pass through the model. Args: x (torch.Tensor): The input tensor. profile (bool): If True, profile the computation time for each layer. visualize (bool): If True, save feature maps for visualization. batch (dict, optional): Ground truth data for evaluation. augment (bool): If True, perform data augmentation during inference. embed (list, optional): A list of feature vectors/embeddings to return. Returns: (torch.Tensor): Model's output tensor. """ y, dt, embeddings = [], [], [] # outputs for m in self.model[:-1]: # except the head part if m.f != -1: # if not from previous layer x = y[m.f] if isinstance(m.f, int) else [x if j == -1 else y[j] for j in m.f] # from earlier layers if profile: self._profile_one_layer(m, x, dt) x = m(x) # run y.append(x if m.i in self.save else None) # save output if visualize: feature_visualization(x, m.type, m.i, save_dir=visualize) if embed and m.i in embed: embeddings.append(torch.nn.functional.adaptive_avg_pool2d(x, (1, 1)).squeeze(-1).squeeze(-1)) # flatten if m.i == max(embed): return torch.unbind(torch.cat(embeddings, 1), dim=0) head = self.model[-1] x = head([y[j] for j in head.f], batch) # head inference return x

class WorldModel(DetectionModel):

"""YOLOv8 World Model."""

textdef __init__(self, cfg="yolov8s-world.yaml", ch=3, nc=None, verbose=True): """ Initialize YOLOv8 world model with given config and parameters. Args: cfg (str | dict): Model configuration file path or dictionary. ch (int): Number of input channels. nc (int, optional): Number of classes. verbose (bool): Whether to display model information. """ self.txt_feats = torch.randn(1, nc or 80, 512) # features placeholder self.clip_model = None # CLIP model placeholder super().__init__(cfg=cfg, ch=ch, nc=nc, verbose=verbose) def set_classes(self, text, batch=80, cache_clip_model=True): """ Set classes in advance so that model could do offline-inference without clip model. Args: text (List[str]): List of class names. batch (int): Batch size for processing text tokens. cache_clip_model (bool): Whether to cache the CLIP model. """ try: import clip except ImportError: check_requirements("git+https://github.com/ultralytics/CLIP.git") import clip if ( not getattr(self, "clip_model", None) and cache_clip_model ): # for backwards compatibility of models lacking clip_model attribute self.clip_model = clip.load("ViT-B/32")[0] model = self.clip_model if cache_clip_model else clip.load("ViT-B/32")[0] device = next(model.parameters()).device text_token = clip.tokenize(text).to(device) txt_feats = [model.encode_text(token).detach() for token in text_token.split(batch)] txt_feats = txt_feats[0] if len(txt_feats) == 1 else torch.cat(txt_feats, dim=0) txt_feats = txt_feats / txt_feats.norm(p=2, dim=-1, keepdim=True) self.txt_feats = txt_feats.reshape(-1, len(text), txt_feats.shape[-1]) self.model[-1].nc = len(text) def predict(self, x, profile=False, visualize=False, txt_feats=None, augment=False, embed=None): """ Perform a forward pass through the model. Args: x (torch.Tensor): The input tensor. profile (bool): If True, profile the computation time for each layer. visualize (bool): If True, save feature maps for visualization. txt_feats (torch.Tensor, optional): The text features, use it if it's given. augment (bool): If True, perform data augmentation during inference. embed (list, optional): A list of feature vectors/embeddings to return. Returns: (torch.Tensor): Model's output tensor. """ txt_feats = (self.txt_feats if txt_feats is None else txt_feats).to(device=x.device, dtype=x.dtype) if len(txt_feats) != len(x) or self.model[-1].export: txt_feats = txt_feats.expand(x.shape[0], -1, -1) ori_txt_feats = txt_feats.clone() y, dt, embeddings = [], [], [] # outputs for m in self.model: # except the head part if m.f != -1: # if not from previous layer x = y[m.f] if isinstance(m.f, int) else [x if j == -1 else y[j] for j in m.f] # from earlier layers if profile: self._profile_one_layer(m, x, dt) if isinstance(m, C2fAttn): x = m(x, txt_feats) elif isinstance(m, WorldDetect): x = m(x, ori_txt_feats) elif isinstance(m, ImagePoolingAttn): txt_feats = m(x, txt_feats) else: x = m(x) # run y.append(x if m.i in self.save else None) # save output if visualize: feature_visualization(x, m.type, m.i, save_dir=visualize) if embed and m.i in embed: embeddings.append(torch.nn.functional.adaptive_avg_pool2d(x, (1, 1)).squeeze(-1).squeeze(-1)) # flatten if m.i == max(embed): return torch.unbind(torch.cat(embeddings, 1), dim=0) return x def loss(self, batch, preds=None): """ Compute loss. Args: batch (dict): Batch to compute loss on. preds (torch.Tensor | List[torch.Tensor], optional): Predictions. """ if not hasattr(self, "criterion"): self.criterion = self.init_criterion() if preds is None: preds = self.forward(batch["img"], txt_feats=batch["txt_feats"]) return self.criterion(preds, batch)

class YOLOEModel(DetectionModel):

"""YOLOE detection model."""

textdef __init__(self, cfg="yoloe-v8s.yaml", ch=3, nc=None, verbose=True): """ Initialize YOLOE model with given config and parameters. Args: cfg (str | dict): Model configuration file path or dictionary. ch (int): Number of input channels. nc (int, optional): Number of classes. verbose (bool): Whether to display model information. """ super().__init__(cfg=cfg, ch=ch, nc=nc, verbose=verbose) @smart_inference_mode() def get_text_pe(self, text, batch=80, cache_clip_model=False, without_reprta=False): """ Set classes in advance so that model could do offline-inference without clip model. Args: text (List[str]): List of class names. batch (int): Batch size for processing text tokens. cache_clip_model (bool): Whether to cache the CLIP model. without_reprta (bool): Whether to return text embeddings cooperated with reprta module. Returns: (torch.Tensor): Text positional embeddings. """ from ultralytics.nn.text_model import build_text_model device = next(self.model.parameters()).device if not getattr(self, "clip_model", None) and cache_clip_model: # For backwards compatibility of models lacking clip_model attribute self.clip_model = build_text_model("mobileclip:blt", device=device) model = self.clip_model if cache_clip_model else build_text_model("mobileclip:blt", device=device) text_token = model.tokenize(text) txt_feats = [model.encode_text(token).detach() for token in text_token.split(batch)] txt_feats = txt_feats[0] if len(txt_feats) == 1 else torch.cat(txt_feats, dim=0) txt_feats = txt_feats.reshape(-1, len(text), txt_feats.shape[-1]) if without_reprta: return txt_feats assert not self.training head = self.model[-1] assert isinstance(head, YOLOEDetect) return head.get_tpe(txt_feats) # run axuiliary text head @smart_inference_mode() def get_visual_pe(self, img, visual): """ Get visual embeddings. Args: img (torch.Tensor): Input image tensor. visual (torch.Tensor): Visual features. Returns: (torch.Tensor): Visual positional embeddings. """ return self(img, vpe=visual, return_vpe=True) def set_vocab(self, vocab, names): """ Set vocabulary for the prompt-free model. Args: vocab (nn.ModuleList): List of vocabulary items. names (List[str]): List of class names. """ assert not self.training head = self.model[-1] assert isinstance(head, YOLOEDetect) # Cache anchors for head device = next(self.parameters()).device self(torch.empty(1, 3, self.args["imgsz"], self.args["imgsz"]).to(device)) # warmup # re-parameterization for prompt-free model self.model[-1].lrpc = nn.ModuleList( LRPCHead(cls, pf[-1], loc[-1], enabled=i != 2) for i, (cls, pf, loc) in enumerate(zip(vocab, head.cv3, head.cv2)) ) for loc_head, cls_head in zip(head.cv2, head.cv3): assert isinstance(loc_head, nn.Sequential) assert isinstance(cls_head, nn.Sequential) del loc_head[-1] del cls_head[-1] self.model[-1].nc = len(names) self.names = check_class_names(names) def get_vocab(self, names): """ Get fused vocabulary layer from the model. Args: names (list): List of class names. Returns: (nn.ModuleList): List of vocabulary modules. """ assert not self.training head = self.model[-1] assert isinstance(head, YOLOEDetect) assert not head.is_fused tpe = self.get_text_pe(names) self.set_classes(names, tpe) device = next(self.model.parameters()).device head.fuse(self.pe.to(device)) # fuse prompt embeddings to classify head vocab = nn.ModuleList() for cls_head in head.cv3: assert isinstance(cls_head, nn.Sequential) vocab.append(cls_head[-1]) return vocab def set_classes(self, names, embeddings): """ Set classes in advance so that model could do offline-inference without clip model. Args: names (List[str]): List of class names. embeddings (torch.Tensor): Embeddings tensor. """ assert not hasattr(self.model[-1], "lrpc"), ( "Prompt-free model does not support setting classes. Please try with Text/Visual prompt models." ) assert embeddings.ndim == 3 self.pe = embeddings self.model[-1].nc = len(names) self.names = check_class_names(names) def get_cls_pe(self, tpe, vpe): """ Get class positional embeddings. Args: tpe (torch.Tensor, optional): Text positional embeddings. vpe (torch.Tensor, optional): Visual positional embeddings. Returns: (torch.Tensor): Class positional embeddings. """ all_pe = [] if tpe is not None: assert tpe.ndim == 3 all_pe.append(tpe) if vpe is not None: assert vpe.ndim == 3 all_pe.append(vpe) if not all_pe: all_pe.append(getattr(self, "pe", torch.zeros(1, 80, 512))) return torch.cat(all_pe, dim=1) def predict( self, x, profile=False, visualize=False, tpe=None, augment=False, embed=None, vpe=None, return_vpe=False ): """ Perform a forward pass through the model. Args: x (torch.Tensor): The input tensor. profile (bool): If True, profile the computation time for each layer. visualize (bool): If True, save feature maps for visualization. tpe (torch.Tensor, optional): Text positional embeddings. augment (bool): If True, perform data augmentation during inference. embed (list, optional): A list of feature vectors/embeddings to return. vpe (torch.Tensor, optional): Visual positional embeddings. return_vpe (bool): If True, return visual positional embeddings. Returns: (torch.Tensor): Model's output tensor. """ y, dt, embeddings = [], [], [] # outputs b = x.shape[0] for m in self.model: # except the head part if m.f != -1: # if not from previous layer x = y[m.f] if isinstance(m.f, int) else [x if j == -1 else y[j] for j in m.f] # from earlier layers if profile: self._profile_one_layer(m, x, dt) if isinstance(m, YOLOEDetect): vpe = m.get_vpe(x, vpe) if vpe is not None else None if return_vpe: assert vpe is not None assert not self.training return vpe cls_pe = self.get_cls_pe(m.get_tpe(tpe), vpe).to(device=x[0].device, dtype=x[0].dtype) if cls_pe.shape[0] != b or m.export: cls_pe = cls_pe.expand(b, -1, -1) x = m(x, cls_pe) else: x = m(x) # run y.append(x if m.i in self.save else None) # save output if visualize: feature_visualization(x, m.type, m.i, save_dir=visualize) if embed and m.i in embed: embeddings.append(torch.nn.functional.adaptive_avg_pool2d(x, (1, 1)).squeeze(-1).squeeze(-1)) # flatten if m.i == max(embed): return torch.unbind(torch.cat(embeddings, 1), dim=0) return x def loss(self, batch, preds=None): """ Compute loss. Args: batch (dict): Batch to compute loss on. preds (torch.Tensor | List[torch.Tensor], optional): Predictions. """ if not hasattr(self, "criterion"): from ultralytics.utils.loss import TVPDetectLoss visual_prompt = batch.get("visuals", None) is not None # TODO self.criterion = TVPDetectLoss(self) if visual_prompt else self.init_criterion() if preds is None: preds = self.forward(batch["img"], tpe=batch.get("txt_feats", None), vpe=batch.get("visuals", None)) return self.criterion(preds, batch)

class YOLOESegModel(YOLOEModel, SegmentationModel):

"""YOLOE segmentation model."""

textdef __init__(self, cfg="yoloe-v8s-seg.yaml", ch=3, nc=None, verbose=True): """ Initialize YOLOE segmentation model with given config and parameters. Args: cfg (str | dict): Model configuration file path or dictionary. ch (int): Number of input channels. nc (int, optional): Number of classes. verbose (bool): Whether to display model information. """ super().__init__(cfg=cfg, ch=ch, nc=nc, verbose=verbose) def loss(self, batch, preds=None): """ Compute loss. Args: batch (dict): Batch to compute loss on. preds (torch.Tensor | List[torch.Tensor], optional): Predictions. """ if not hasattr(self, "criterion"): from ultralytics.utils.loss import TVPSegmentLoss visual_prompt = batch.get("visuals", None) is not None # TODO self.criterion = TVPSegmentLoss(self) if visual_prompt else self.init_criterion() if preds is None: preds = self.forward(batch["img"], tpe=batch.get("txt_feats", None), vpe=batch.get("visuals", None)) return self.criterion(preds, batch)

class Ensemble(torch.nn.ModuleList):

"""Ensemble of models."""

textdef __init__(self): """Initialize an ensemble of models.""" super().__init__() def forward(self, x, augment=False, profile=False, visualize=False): """ Generate the YOLO network's final layer. Args: x (torch.Tensor): Input tensor. augment (bool): Whether to augment the input. profile (bool): Whether to profile the model. visualize (bool): Whether to visualize the features. Returns: (tuple): Tuple containing the concatenated predictions and None. """ y = [module(x, augment, profile, visualize)[0] for module in self] # y = torch.stack(y).max(0)[0] # max ensemble # y = torch.stack(y).mean(0) # mean ensemble y = torch.cat(y, 2) # nms ensemble, y shape(B, HW, C) return y, None # inference, train output

@contextlib.contextmanager

def temporary_modules(modules=None, attributes=None):

"""

Context manager for temporarily adding or modifying modules in Python's module cache (sys.modules).

textThis function can be used to change the module paths during runtime. It's useful when refactoring code, where you've moved a module from one location to another, but you still want to support the old import paths for backwards compatibility. Args: modules (dict, optional): A dictionary mapping old module paths to new module paths. attributes (dict, optional): A dictionary mapping old module attributes to new module attributes. Examples: >>> with temporary_modules({"old.module": "new.module"}, {"old.module.attribute": "new.module.attribute"}): >>> import old.module # this will now import new.module >>> from old.module import attribute # this will now import new.module.attribute Note: The changes are only in effect inside the context manager and are undone once the context manager exits. Be aware that directly manipulating `sys.modules` can lead to unpredictable results, especially in larger applications or libraries. Use this function with caution. """ if modules is None: modules = {} if attributes is None: attributes = {} import sys from importlib import import_module try: # Set attributes in sys.modules under their old name for old, new in attributes.items(): old_module, old_attr = old.rsplit(".", 1) new_module, new_attr = new.rsplit(".", 1) setattr(import_module(old_module), old_attr, getattr(import_module(new_module), new_attr)) # Set modules in sys.modules under their old name for old, new in modules.items(): sys.modules[old] = import_module(new) yield finally: # Remove the temporary module paths for old in modules: if old in sys.modules: del sys.modules[old]

class SafeClass:

"""A placeholder class to replace unknown classes during unpickling."""

textdef __init__(self, *args, **kwargs): """Initialize SafeClass instance, ignoring all arguments.""" pass def __call__(self, *args, **kwargs): """Run SafeClass instance, ignoring all arguments.""" pass

class SafeUnpickler(pickle.Unpickler):

"""Custom Unpickler that replaces unknown classes with SafeClass."""

textdef find_class(self, module, name): """ Attempt to find a class, returning SafeClass if not among safe modules. Args: module (str): Module name. name (str): Class name. Returns: (type): Found class or SafeClass. """ safe_modules = ( "torch", "collections", "collections.abc", "builtins", "math", "numpy", # Add other modules considered safe ) if module in safe_modules: return super().find_class(module, name) else: return SafeClass

def torch_safe_load(weight, safe_only=False):

"""

Attempts to load a PyTorch model with the torch.load() function. If a ModuleNotFoundError is raised, it catches the

error, logs a warning message, and attempts to install the missing module via the check_requirements() function.

After installation, the function again attempts to load the model using torch.load().

textArgs: weight (str): The file path of the PyTorch model. safe_only (bool): If True, replace unknown classes with SafeClass during loading. Returns: ckpt (dict): The loaded model checkpoint. file (str): The loaded filename. Examples: >>> from ultralytics.nn.tasks import torch_safe_load >>> ckpt, file = torch_safe_load("path/to/best.pt", safe_only=True) """ from ultralytics.utils.downloads import attempt_download_asset check_suffix(file=weight, suffix=".pt") file = attempt_download_asset(weight) # search online if missing locally try: with temporary_modules( modules={ "ultralytics.yolo.utils": "ultralytics.utils", "ultralytics.yolo.v8": "ultralytics.models.yolo", "ultralytics.yolo.data": "ultralytics.data", }, attributes={ "ultralytics.nn.modules.block.Silence": "torch.nn.Identity", # YOLOv9e "ultralytics.nn.tasks.YOLOv10DetectionModel": "ultralytics.nn.tasks.DetectionModel", # YOLOv10 "ultralytics.utils.loss.v10DetectLoss": "ultralytics.utils.loss.E2EDetectLoss", # YOLOv10 }, ): if safe_only: # Load via custom pickle module safe_pickle = types.ModuleType("safe_pickle") safe_pickle.Unpickler = SafeUnpickler safe_pickle.load = lambda file_obj: SafeUnpickler(file_obj).load() with open(file, "rb") as f: ckpt = torch.load(f, pickle_module=safe_pickle) else: ckpt = torch.load(file, map_location="cpu") except ModuleNotFoundError as e: # e.name is missing module name if e.name == "models": raise TypeError( emojis( f"ERROR ❌️ {weight} appears to be an Ultralytics YOLOv5 model originally trained " f"with https://github.com/ultralytics/yolov5.\nThis model is NOT forwards compatible with " f"YOLOv8 at https://github.com/ultralytics/ultralytics." f"\nRecommend fixes are to train a new model using the latest 'ultralytics' package or to " f"run a command with an official Ultralytics model, i.e. 'yolo predict model=yolo11n.pt'" ) ) from e LOGGER.warning( f"{weight} appears to require '{e.name}', which is not in Ultralytics requirements." f"\nAutoInstall will run now for '{e.name}' but this feature will be removed in the future." f"\nRecommend fixes are to train a new model using the latest 'ultralytics' package or to " f"run a command with an official Ultralytics model, i.e. 'yolo predict model=yolo11n.pt'" ) check_requirements(e.name) # install missing module ckpt = torch.load(file, map_location="cpu") if not isinstance(ckpt, dict): # File is likely a YOLO instance saved with i.e. torch.save(model, "saved_model.pt") LOGGER.warning( f"The file '{weight}' appears to be improperly saved or formatted. " f"For optimal results, use model.save('filename.pt') to correctly save YOLO models." ) ckpt = {"model": ckpt.model} return ckpt, file

def attempt_load_weights(weights, device=None, inplace=True, fuse=False):

"""

Load an ensemble of models weights=[a,b,c] or a single model weights=[a] or weights=a.

textArgs: weights (str | List[str]): Model weights path(s). device (torch.device, optional): Device to load model to. inplace (bool): Whether to do inplace operations. fuse (bool): Whether to fuse model. Returns: (torch.nn.Module): Loaded model. """ ensemble = Ensemble() for w in weights if isinstance(weights, list) else [weights]: ckpt, w = torch_safe_load(w) # load ckpt args = {**DEFAULT_CFG_DICT, **ckpt["train_args"]} if "train_args" in ckpt else None # combined args model = (ckpt.get("ema") or ckpt["model"]).to(device).float() # FP32 model # Model compatibility updates model.args = args # attach args to model model.pt_path = w # attach *.pt file path to model model.task = guess_model_task(model) if not hasattr(model, "stride"): model.stride = torch.tensor([32.0]) # Append ensemble.append(model.fuse().eval() if fuse and hasattr(model, "fuse") else model.eval()) # model in eval mode # Module updates for m in ensemble.modules(): if hasattr(m, "inplace"): m.inplace = inplace elif isinstance(m, torch.nn.Upsample) and not hasattr(m, "recompute_scale_factor"): m.recompute_scale_factor = None # torch 1.11.0 compatibility # Return model if len(ensemble) == 1: return ensemble[-1] # Return ensemble LOGGER.info(f"Ensemble created with {weights}\n") for k in "names", "nc", "yaml": setattr(ensemble, k, getattr(ensemble[0], k)) ensemble.stride = ensemble[int(torch.argmax(torch.tensor([m.stride.max() for m in ensemble])))].stride assert all(ensemble[0].nc == m.nc for m in ensemble), f"Models differ in class counts {[m.nc for m in ensemble]}" return ensemble

def attempt_load_one_weight(weight, device=None, inplace=True, fuse=False):

"""

Load a single model weights.

textArgs: weight (str): Model weight path. device (torch.device, optional): Device to load model to. inplace (bool): Whether to do inplace operations. fuse (bool): Whether to fuse model. Returns: (tuple): Tuple containing the model and checkpoint. """ ckpt, weight = torch_safe_load(weight) # load ckpt args = {**DEFAULT_CFG_DICT, **(ckpt.get("train_args", {}))} # combine model and default args, preferring model args model = (ckpt.get("ema") or ckpt["model"]).to(device).float() # FP32 model # Model compatibility updates model.args = {k: v for k, v in args.items() if k in DEFAULT_CFG_KEYS} # attach args to model model.pt_path = weight # attach *.pt file path to model model.task = guess_model_task(model) if not hasattr(model, "stride"): model.stride = torch.tensor([32.0]) model = model.fuse().eval() if fuse and hasattr(model, "fuse") else model.eval() # model in eval mode # Module updates for m in model.modules(): if hasattr(m, "inplace"): m.inplace = inplace elif isinstance(m, torch.nn.Upsample) and not hasattr(m, "recompute_scale_factor"): m.recompute_scale_factor = None # torch 1.11.0 compatibility # Return model and ckpt return model, ckpt

def parse_model(d, ch, verbose=True): # model_dict, input_channels(3)

"""

Parse a YOLO model.yaml dictionary into a PyTorch model.

textArgs: d (dict): Model dictionary. ch (int): Input channels. verbose (bool): Whether to print model details. Returns: (tuple): Tuple containing the PyTorch model and sorted list of output layers. """ import ast # Args legacy = True # backward compatibility for v3/v5/v8/v9 models max_channels = float("inf") nc, act, scales = (d.get(x) for x in ("nc", "activation", "scales")) depth, width, kpt_shape = (d.get(x, 1.0) for x in ("depth_multiple", "width_multiple", "kpt_shape")) if scales: scale = d.get("scale") if not scale: scale = tuple(scales.keys())[0] LOGGER.warning(f"no model scale passed. Assuming scale='{scale}'.") depth, width, max_channels = scales[scale] if act: Conv.default_act = eval(act) # redefine default activation, i.e. Conv.default_act = torch.nn.SiLU() if verbose: LOGGER.info(f"{colorstr('activation:')} {act}") # print if verbose: LOGGER.info(f"\n{'':>3}{'from':>20}{'n':>3}{'params':>10} {'module':<45}{'arguments':<30}") ch = [ch] layers, save, c2 = [], [], ch[-1] # layers, savelist, ch out base_modules = frozenset( { Classify, Conv, CBAM, ConvTranspose, GhostConv, Bottleneck, GhostBottleneck, SPP, SPPF, C2fPSA, C2PSA, DWConv, Focus, BottleneckCSP, C1, C2, C2f, C3k2, RepNCSPELAN4, ELAN1, ADown, AConv, SPPELAN, C2fAttn, C3, C3TR, C3Ghost, torch.nn.ConvTranspose2d, DWConvTranspose2d, C3x, RepC3, PSA, SCDown, C2fCIB, A2C2f, } ) repeat_modules = frozenset( # modules with 'repeat' arguments { BottleneckCSP, C1, C2, C2f, C3k2, C2fAttn, C3, C3TR, C3Ghost, C3x, RepC3, C2fPSA, C2fCIB, C2PSA, A2C2f, } ) for i, (f, n, m, args) in enumerate(d["backbone"] + d["head"]): # from, number, module, args m = ( getattr(torch.nn, m[3:]) if "nn." in m else getattr(__import__("torchvision").ops, m[16:]) if "torchvision.ops." in m else globals()[m] ) # get module for j, a in enumerate(args): if isinstance(a, str): with contextlib.suppress(ValueError): args[j] = locals()[a] if a in locals() else ast.literal_eval(a) n = n_ = max(round(n * depth), 1) if n > 1 else n # depth gain if m in base_modules: c1, c2 = ch[f], args[0] if c2 != nc: # if c2 not equal to number of classes (i.e. for Classify() output) c2 = make_divisible(min(c2, max_channels) * width, 8) if m is C2fAttn: # set 1) embed channels and 2) num heads args[1] = make_divisible(min(args[1], max_channels // 2) * width, 8) args[2] = int(max(round(min(args[2], max_channels // 2 // 32)) * width, 1) if args[2] > 1 else args[2]) if m is CBAM: args = [c1, *args[1:]] args = [c1, c2, *args[1:]] if m in repeat_modules: args.insert(2, n) # number of repeats n = 1 if m is C3k2: # for M/L/X sizes legacy = False if scale in "mlx": args[3] = True if m is A2C2f: legacy = False if scale in "lx": # for L/X sizes args.extend((True, 1.2)) if m is C2fCIB: legacy = False elif m is AIFI: args = [ch[f], *args] elif m in frozenset({HGStem, HGBlock}): c1, cm, c2 = ch[f], args[0], args[1] args = [c1, cm, c2, *args[2:]] if m is HGBlock: args.insert(4, n) # number of repeats n = 1 elif m is ResNetLayer: c2 = args[1] if args[3] else args[1] * 4 elif m is torch.nn.BatchNorm2d: args = [ch[f]] elif m is Concat: c2 = sum(ch[x] for x in f) elif m in frozenset( {Detect, WorldDetect, YOLOEDetect, Segment, YOLOESegment, Pose, OBB, ImagePoolingAttn, v10Detect} ): args.append([ch[x] for x in f]) if m is Segment or m is YOLOESegment: args[2] = make_divisible(min(args[2], max_channels) * width, 8) if m in {Detect, YOLOEDetect, Segment, YOLOESegment, Pose, OBB}: m.legacy = legacy elif m is RTDETRDecoder: # special case, channels arg must be passed in index 1 args.insert(1, [ch[x] for x in f]) elif m is CBLinear: c2 = args[0] c1 = ch[f] args = [c1, c2, *args[1:]] elif m is CBFuse: c2 = ch[f[-1]] elif m in frozenset({TorchVision, Index}): c2 = args[0] c1 = ch[f] args = [*args[1:]] else: c2 = ch[f] m_ = torch.nn.Sequential(*(m(*args) for _ in range(n))) if n > 1 else m(*args) # module t = str(m)[8:-2].replace("__main__.", "") # module type m_.np = sum(x.numel() for x in m_.parameters()) # number params m_.i, m_.f, m_.type = i, f, t # attach index, 'from' index, type if verbose: LOGGER.info(f"{i:>3}{str(f):>20}{n_:>3}{m_.np:10.0f} {t:<45}{str(args):<30}") # print save.extend(x % i for x in ([f] if isinstance(f, int) else f) if x != -1) # append to savelist layers.append(m_) if i == 0: ch = [] ch.append(c2) return torch.nn.Sequential(*layers), sorted(save)

def yaml_model_load(path):

"""

Load a YOLOv8 model from a YAML file.

textArgs: path (str | Path): Path to the YAML file. Returns: (dict): Model dictionary. """ path = Path(path) if path.stem in (f"yolov{d}{x}6" for x in "nsmlx" for d in (5, 8)): new_stem = re.sub(r"(\d+)([nslmx])6(.+)?$", r"\1\2-p6\3", path.stem) LOGGER.warning(f"Ultralytics YOLO P6 models now use -p6 suffix. Renaming {path.stem} to {new_stem}.") path = path.with_name(new_stem + path.suffix) unified_path = re.sub(r"(\d+)([nslmx])(.+)?$", r"\1\3", str(path)) # i.e. yolov8x.yaml -> yolov8.yaml yaml_file = check_yaml(unified_path, hard=False) or check_yaml(path) d = YAML.load(yaml_file) # model dict d["scale"] = guess_model_scale(path) d["yaml_file"] = str(path) return d

def guess_model_scale(model_path):

"""

Extract the size character n, s, m, l, or x of the model's scale from the model path.

textArgs: model_path (str | Path): The path to the YOLO model's YAML file. Returns: (str): The size character of the model's scale (n, s, m, l, or x). """ try: return re.search(r"yolo(e-)?[v]?\d+([nslmx])", Path(model_path).stem).group(2) # noqa except AttributeError: return ""

def guess_model_task(model):

"""

Guess the task of a PyTorch model from its architecture or configuration.

textArgs: model (torch.nn.Module | dict): PyTorch model or model configuration in YAML format. Returns: (str): Task of the model ('detect', 'segment', 'classify', 'pose', 'obb'). """ def cfg2task(cfg): """Guess from YAML dictionary.""" m = cfg["head"][-1][-2].lower() # output module name if m in {"classify", "classifier", "cls", "fc"}: return "classify" if "detect" in m: return "detect" if "segment" in m: return "segment" if m == "pose": return "pose" if m == "obb": return "obb" # Guess from model cfg if isinstance(model, dict): with contextlib.suppress(Exception): return cfg2task(model) # Guess from PyTorch model if isinstance(model, torch.nn.Module): # PyTorch model for x in "model.args", "model.model.args", "model.model.model.args": with contextlib.suppress(Exception): return eval(x)["task"] for x in "model.yaml", "model.model.yaml", "model.model.model.yaml": with contextlib.suppress(Exception): return cfg2task(eval(x)) for m in model.modules(): if isinstance(m, (Segment, YOLOESegment)): return "segment" elif isinstance(m, Classify): return "classify" elif isinstance(m, Pose): return "pose" elif isinstance(m, OBB): return "obb" elif isinstance(m, (Detect, WorldDetect, YOLOEDetect, v10Detect)): return "detect" # Guess from model filename if isinstance(model, (str, Path)): model = Path(model) if "-seg" in model.stem or "segment" in model.parts: return "segment" elif "-cls" in model.stem or "classify" in model.parts: return "classify" elif "-pose" in model.stem or "pose" in model.parts: return "pose" elif "-obb" in model.stem or "obb" in model.parts: return "obb" elif "detect" in model.parts: return "detect" # Unable to determine task from model LOGGER.warning( "Unable to automatically guess model task, assuming 'task=detect'. " "Explicitly define task for your model, i.e. 'task=detect', 'segment', 'classify','pose' or 'obb'." ) return "detect" # assume detect

代码现在报错如下:(Yolov11) jd@jd-x11dai-n:/media/jd/4997BB1603CFE2C4/lw/ultralytic$ python train.py

WARNING ⚠️ no model scale passed. Assuming scale='n'.

Traceback (most recent call last):

File "train.py", line 17, in <module>

model = YOLO(model='ultralytics/cfg/models/11/yolo11_cbam.yaml')

File "/media/jd/4997BB1603CFE2C4/lw/ultralytic/ultralytics/models/yolo/model.py", line 53, in init

super().init(model=model, task=task, verbose=verbose)

File "/media/jd/4997BB1603CFE2C4/lw/ultralytic/ultralytics/engine/model.py", line 146, in init

self._new(model, task=task, verbose=verbose)

File "/media/jd/4997BB1603CFE2C4/lw/ultralytic/ultralytics/engine/model.py", line 258, in _new

self.model = (model or self.smart_load("model"))(cfg_dict, verbose=verbose and RANK == -1) # build model

File "/media/jd/4997BB1603CFE2C4/lw/ultralytic/ultralytics/nn/tasks.py", line 336, in init

self.model, self.save = parse_model(deepcopy(self.yaml), ch=ch, verbose=verbose) # model, savelist

File "/media/jd/4997BB1603CFE2C4/lw/ultralytic/ultralytics/nn/tasks.py", line 1498, in parse_model

m = torch.nn.Sequential(*(m(*args) for _ in range(n))) if n > 1 else m(*args) # module

TypeError: init() takes from 2 to 3 positional arguments but 4 were given

如何解决呢

pythonfrom PIL import Image import matplotlib.pyplot as plt img = Image.open('/mnt/data/image.png') plt.imshow(img); plt.axis('off') (-0.5, 1626.5, 390.5, -0.5)

pythonfrom PIL import Image import matplotlib.pyplot as plt img = Image.open('/mnt/data/image.png') plt.imshow(img); plt.axis('off') (-0.5, 1626.5, 390.5, -0.5)

(-0.5, 1626.5, 390.5, -0.5)

text

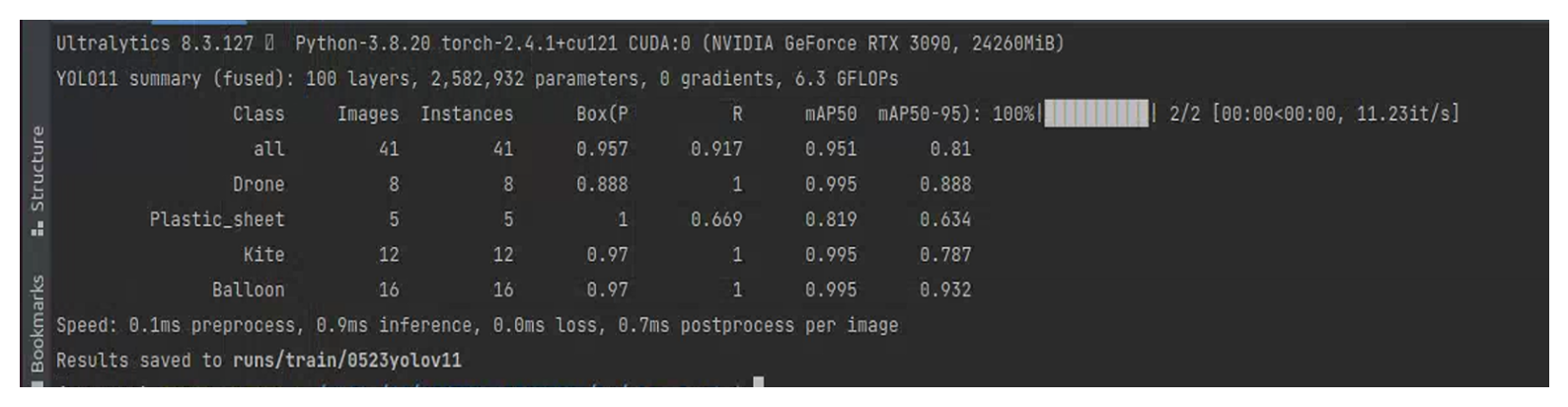

从你截屏的验证集统计来看(共 41 张验证图片,4 类):

| 类别 | R(召回) | mAP50 | mAP50-95 | 备注 |

|---|---|---|---|---|

| Drone | 1.00 | 0.995 | 0.888 | 表现非常好 |

| Plastic_sheet | 0.669 | 0.819 | 0.634 | 明显短板 |

| Kite | 1.00 | 0.995 | 0.787 | 轻微可提升 |

| Balloon | 1.00 | 0.995 | 0.932 | 稳定 |

TypeError: __init__() takes from 2 to 3 positional arguments but 4 were given问题出在 CBAM 模块的参数被多传了一个 c2。

Ultralytics 的 parse_model() 会自动把 [c1, c2, …] 拼进参数里;而 CBAM 只需要 [in_channels, kernel_size]。

| 方案 | 操作 | 解释 |

|---|---|---|

| A. 改 yaml | 把 CBAM 那行改成<br/>```yaml | - [-1, 1, CBAM, [7]]``` |

| B. 改 parse_model | 在 if m is CBAM: 处,把<br/>python args = [c1, *args[1:]]<br/>改成<br/>python args = [c1] + args[2:] | 彻底根治多余 c2 传参的问题 |

目标:在极小数据量下进一步提升 泛化 ,尤其是对“塑料薄膜”类。

| 方向 | 建议/插件 | 一句话要点 |

|---|---|---|

| 迁移学习 | 1. 冻结 backbone(–freeze 10 ~ 14)<br/>2. 起始权重用 yolo11s.pt 或 yolo11m.pt 在 COCO 预训的权重 | 让小数据只调头部,降低过拟合 |

| 数据增强 | - Mosaic + MixUp(Ultralytics 默认)<br/>- Copy-Paste:把稀有类别糊到背景上<br/>- HSV、仿射、RandomErasing | “虚增”稀有类样本数,提高 recall |

| 损失函数 | - Focal Loss γ=1.5 ~ 2(内置 fl_gamma)<br/>- Class-Balanced Loss(CB-Loss) | 减少长尾类别梯度被主类淹没 |

| 注意力插件 | - ECA 或 SE:在 C3k2 里替换/并联<br/>- 轻量 ASPP:对 P5 施加空洞卷积 | 强化小目标 & 细纹理感知 |

| 特征金字塔 | - 在 head 处再加一支 P2 (stride 4) 分支 -> 检测极小异物 | 适用于 drone+balloon 类微型目标 |

| 解耦头 | 把 Detect head 改为 Decoupled-Head(cls/box 分离) | 收敛更快,少样本常见做法 |

| 正样本采样 | Task-Aligned Assigner (TAL)<br/>YOLOv11 已内置;可把 ta_gamma 提高到 1.0-2.0 | 对小类赋更高正样本权重 |

| 增量蒸馏 | 用 COCO 预训 yolo11m 做 Teacher,采用 Detection KD(ultralytics 支持) | 用大网络的软标签稳定训练 |

| 优化器 / 训练策略 | - lr0 调小到 1e-4,cosine schedule<br/>- epochs 保持 100~150,但 每 20 epochs 早停 | 防止后期过拟合,留泛化 |

bash# 1. 修正 yaml(假设保存为 yolo11_cbam_fix.yaml) # 2. 冻结前 12 层,使用 focal loss, copy-paste, 早停 yolo detect train \ model=yolo11_cbam_fix.yaml \ data=data.yaml \ epochs=120 \ imgsz=640 \ batch=16 \ freeze=12 \ fl_gamma=1.5 \ mosaic=1.0 mixup=0.2 copy_paste=0.35 \ patience=20 \ lr0=1e-4 \ pretrained=yolo11s.pt

head: 段新增层并把 Detect 换成 DetectDecouple 即可,基本做到 plug-and-play。scale=s)。祝实验顺利,若还有其他报错或想要具体代码片段,随时告诉我!

请先给出代码报错的解决方案

下面给出两种最常见、也最安全的 “一行改动”方案——任选其一即可解决

TypeError: __init__() takes from 2 to 3 positional arguments but 4 were given

(根源:CBAM 只需要 in_channels 和可选的 kernel_size,却被多塞进了 out_channels)

ultralytics/cfg/models/11/yolo11_cbam.yamlyaml- [-1, 1, CBAM, [1024, 7]]

改成

yaml- [-1, 1, CBAM, [7]] # 仅保留 kernel_size,或直接用空列表 []

解释:

parse_model()会自动把当前层的输入通道数c1填到参数列表最前面。- 你 yaml 里再写

1024(其实是c1)就会造成 “3 个实参 + self = 4” 的冲突。- 留

[7](或[],让 CBAM 使用默认 7)即可。

保存后重新运行:

bashpython train.py --model ultralytics/cfg/models/11/yolo11_cbam.yaml

parse_model()(根治所有 CBAM 重复传参)ultralytics/nn/tasks.pyparse_model() 里专门处理 CBAM 的片段:pythonif m is CBAM: args = [c1, *args] # 原来这行会把 “c1 + 整个 args” 一起塞进去

pythonif m is CBAM: args = [c1] + args[1:] # 仅保留 kernel_size,丢掉多余的 out_channels

1024。args[0](通常就是 out_channels),因此同样适配其它自定义 yaml。如果你后面还想在不同 yaml 里多次插 CBAM,推荐用 方案 B(一次修改,永久生效)。

完成其中任意一步后,再运行 python train.py 就不会再出现上述 TypeError。如还有其它报错,随时贴出来!

按照方案B更改后代码还是报错(Yolov11) jd@jd-x11dai-n:/media/jd/4997BB1603CFE2C4/lw/ultralytic python train.py

WARNING ⚠️ no model scale passed. Assuming scale='n'.

Traceback (most recent call last):

File "train.py", line 17, in <module>

model = YOLO(model='ultralytics/cfg/models/11/yolo11_cbam.yaml')

File "/media/jd/4997BB1603CFE2C4/lw/ultralytic/ultralytics/models/yolo/model.py", line 53, in __init__

super().__init__(model=model, task=task, verbose=verbose)

File "/media/jd/4997BB1603CFE2C4/lw/ultralytic/ultralytics/engine/model.py", line 146, in __init__

self._new(model, task=task, verbose=verbose)

File "/media/jd/4997BB1603CFE2C4/lw/ultralytic/ultralytics/engine/model.py", line 258, in _new

self.model = (model or self._smart_load("model"))(cfg_dict, verbose=verbose and RANK == -1) # build model

File "/media/jd/4997BB1603CFE2C4/lw/ultralytic/ultralytics/nn/tasks.py", line 336, in __init__

self.model, self.save = parse_model(deepcopy(self.yaml), ch=ch, verbose=verbose) # model, savelist

File "/media/jd/4997BB1603CFE2C4/lw/ultralytic/ultralytics/nn/tasks.py", line 1499, in parse_model

m_ = torch.nn.Sequential(*(m(*args) for _ in range(n))) if n > 1 else m(*args) # module

TypeError: __init__() takes from 2 to 3 positional arguments but 4 were given

(Yolov11) jd@jd-x11dai-n:/media/jd/4997BB1603CFE2C4/lw/ultralytic python train.py

WARNING ⚠️ no model scale passed. Assuming scale='n'.

Traceback (most recent call last):

File "train.py", line 17, in <module>

model = YOLO(model='ultralytics/cfg/models/11/yolo11_cbam.yaml')

File "/media/jd/4997BB1603CFE2C4/lw/ultralytic/ultralytics/models/yolo/model.py", line 53, in init

super().init(model=model, task=task, verbose=verbose)

File "/media/jd/4997BB1603CFE2C4/lw/ultralytic/ultralytics/engine/model.py", line 146, in init

self._new(model, task=task, verbose=verbose)

File "/media/jd/4997BB1603CFE2C4/lw/ultralytic/ultralytics/engine/model.py", line 258, in _new

self.model = (model or self.smart_load("model"))(cfg_dict, verbose=verbose and RANK == -1) # build model

File "/media/jd/4997BB1603CFE2C4/lw/ultralytic/ultralytics/nn/tasks.py", line 336, in init

self.model, self.save = parse_model(deepcopy(self.yaml), ch=ch, verbose=verbose) # model, savelist

File "/media/jd/4997BB1603CFE2C4/lw/ultralytic/ultralytics/nn/tasks.py", line 1498, in parse_model

m = torch.nn.Sequential(*(m(*args) for _ in range(n))) if n > 1 else m(args) # module

TypeError: init() takes from 2 to 3 positional arguments but 4 were given

(Yolov11) jd@jd-x11dai-n:/media/jd/4997BB1603CFE2C4/lw/ultralytic$ python train.py

WARNING ⚠️ no model scale passed. Assuming scale='n'.

Traceback (most recent call last):

File "train.py", line 17, in <module>

model = YOLO(model='ultralytics/cfg/models/11/yolo11_cbam.yaml')

File "/media/jd/4997BB1603CFE2C4/lw/ultralytic/ultralytics/models/yolo/model.py", line 53, in init

super().init(model=model, task=task, verbose=verbose)

File "/media/jd/4997BB1603CFE2C4/lw/ultralytic/ultralytics/engine/model.py", line 146, in init

self._new(model, task=task, verbose=verbose)

File "/media/jd/4997BB1603CFE2C4/lw/ultralytic/ultralytics/engine/model.py", line 258, in _new

self.model = (model or self.smart_load("model"))(cfg_dict, verbose=verbose and RANK == -1) # build model

File "/media/jd/4997BB1603CFE2C4/lw/ultralytic/ultralytics/nn/tasks.py", line 336, in init

self.model, self.save = parse_model(deepcopy(self.yaml), ch=ch, verbose=verbose) # model, savelist

File "/media/jd/4997BB1603CFE2C4/lw/ultralytic/ultralytics/nn/tasks.py", line 1498, in parse_model

m = torch.nn.Sequential((m(*args) for _ in range(n))) if n > 1 else m(*args) # module

TypeError: init() takes from 2 to 3 positional arguments but 4 were given

报错仍然出现,说明真正被 Python 解释器加载的 parse_model() 并没有用到你刚才改动的那一行——要么改错了文件,要么环境里还有 另一份 同名源码。下面给出系统化定位 + 终极兜底两种方法,任选其一即可让程序跑起来。

目标:让我们 100 % 确认 正在执行 的

parse_model()代码段。

parse_model() 里插一句日志

pythonif m is CBAM: print("### parse_model sees CBAM, args =", args) # ← 加这一行 args = [c1] + args[2:] # <— 你希望的写法,只保留 c1

bashpython train.py

### parse_model sees CBAM …,说明你改的并不是正在被 import 的那份文件。pip show ultralytics 看看真正安装路径,再去改那里的 tasks.py。args 仍然包含 1024,说明改行写错了——请确认是

(pythonargs = [c1] + args[2:]

args[2:] 而不是 args[1:])。✅ 只要 日志能打印 且

args里 看不到 1024,这条 CBAM 就不会再抛 “4 个参数” 的错。

| 方案 | 操作 | 适用场景 |

|---|---|---|

| A. YAML 一劳永逸 | 把 CBAM 那行改成<br/>yaml<br/>- [-1, 1, CBAM, []] # 或 [-1,1,CBAM,[7]]<br/> | 不想再动源码 / 多环境 |

| B. 让 CBAM 忽略多余参数 | 在 CBAM 类里改成:<br/>python<br/>class CBAM(nn.Module):<br/> def __init__(self, c1, kernel_size=7, *_, **__):<br/> ...<br/> | 日后可能还会在 YAML 里写错参数,希望模型也能跑 |